From Spark to Strategy: Defining Core Value

A coffee-shop insight became our rallying idea: fix a small, painful workflow so users reclaim time. We turned that spark into a guiding promise for every decision.

Research followed: interviews, task mapping, and quick prototypes tested our assumptions. We measured qualitative delight and time-on-task to learn whether users would actually receive the benefit.

We translated value into measurable hypotheses and prioritized features by impact and effort. Quick experiments defined MVP scope:

| Value | Metric |
|---|---|
| Save time | Minutes per session |
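Prioritizing by impact and effort can be sketched as a simple ratio score. This is a minimal illustration, not the team's actual scoring model: the `Feature` class, the 1-to-5 scales, and the backlog entries are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    impact: int  # estimated user value, 1 (low) to 5 (high); hypothetical scale
    effort: int  # estimated build cost, 1 (low) to 5 (high); hypothetical scale


def prioritize(features):
    """Return features sorted by impact-to-effort ratio, highest first."""
    return sorted(features, key=lambda f: f.impact / f.effort, reverse=True)


# Hypothetical backlog, invented for illustration only.
backlog = [
    Feature("bulk import", impact=4, effort=4),
    Feature("one-click template", impact=5, effort=2),
    Feature("custom themes", impact=2, effort=3),
]

ranked = prioritize(backlog)
for feature in ranked:
    print(f"{feature.name}: {feature.impact / feature.effort:.2f}")
```

A ratio is the simplest option; a team that wants to weight confidence or reach could extend the score, but the ranking step stays the same.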

That focus kept the roadmap lean and made testing routine: short releases, user feedback loops, and analytics. Lessons shaped product tone and onboarding to improve the first-run experience and long-term retention. Weekly metrics informed decisions and small tactical shifts.

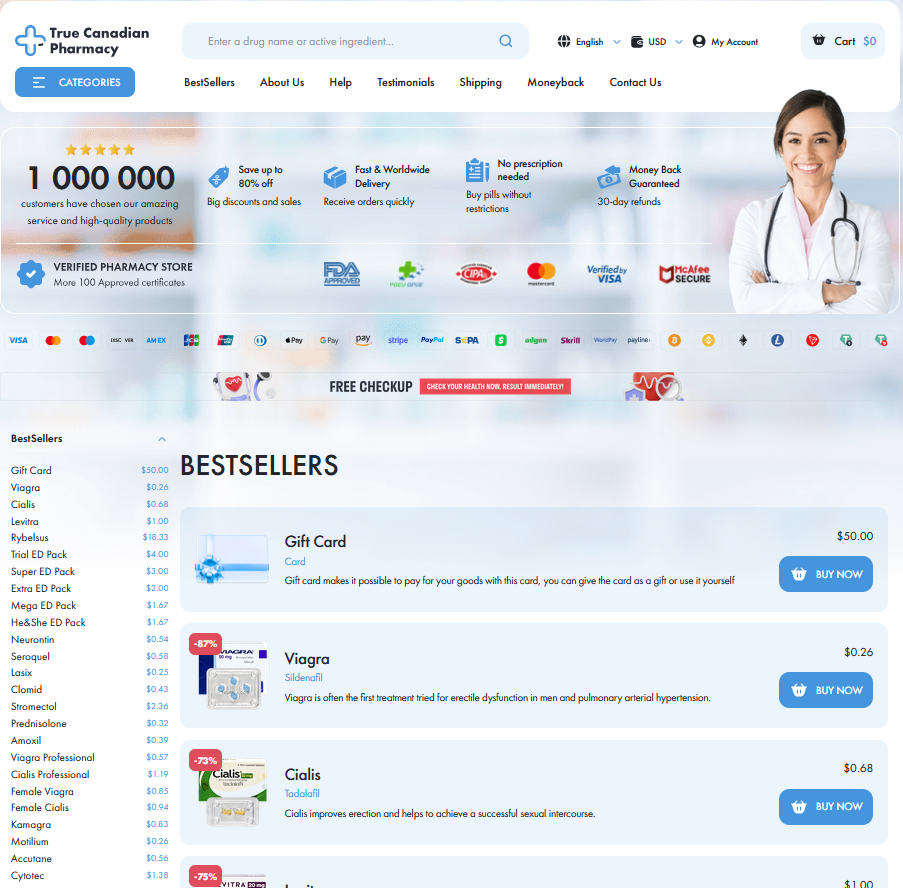

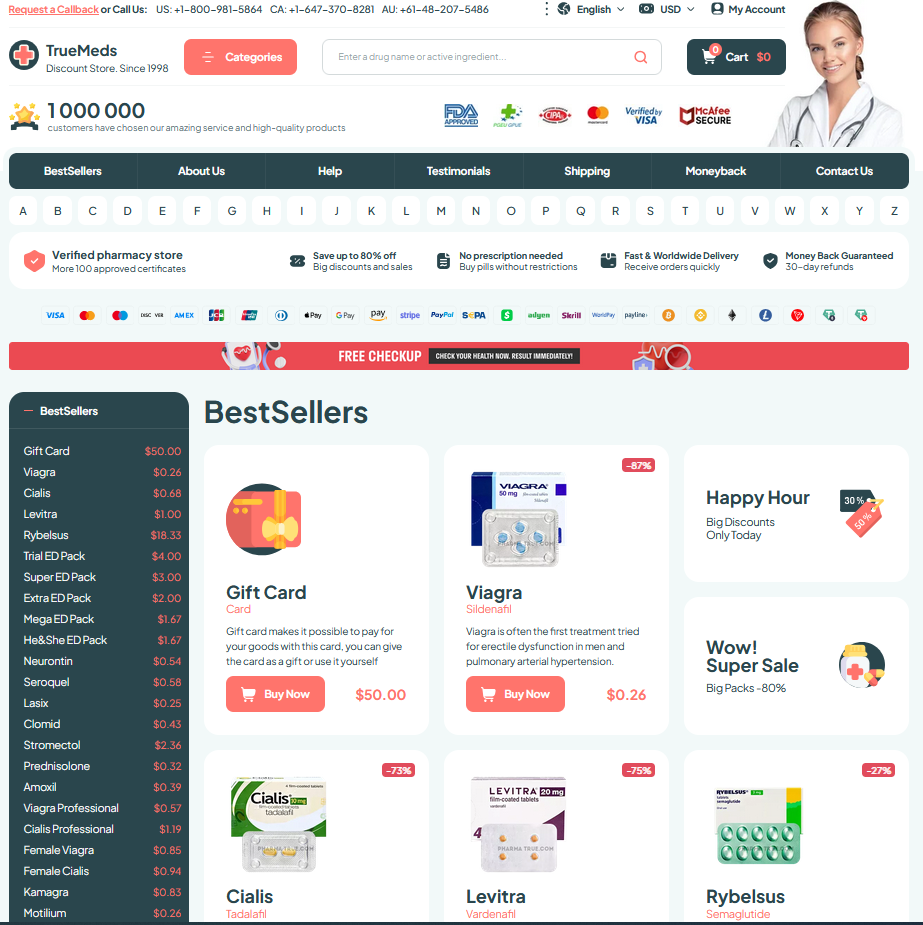

Market Trenches: Research, Validation, and Competitor Mapping

A small insight sparked a disciplined research plan for iverjohn, shifting vague hopes into measurable aims. Early surveys and analytics trimmed assumptions and focused the team.

The team interviewed users, mapped pain points, and quantified opportunity size to validate demand. Prototype tests measured conversion drivers and acceptable tradeoffs.

Competitor mapping exposed gaps: pricing blindspots, feature fatigue, and unmet niches that informed product differentiation.

Synthesis produced hypotheses, prioritized experiments, and a roadmap for quick tests; results shaped design and go-to-market choices. This reduced risk and helped teams make confident decisions faster.

Design Sprint: Prototyping User Flows and Visuals

A small cross-functional team at iverjohn ran timeboxed workshops to turn assumptions into mapped journeys, prioritizing core tasks and deciding success metrics before any pixels were drawn.

Sketches and low-fidelity screens helped reveal edge cases; quick clickable prototypes enabled early usability tests with real users, exposing friction and guiding feature scope with evidence.

Visual experiments established hierarchy, motion cues, and a component system that designers and engineers could reuse. Accessibility checks were run and feedback loops were documented; occasionally we pivoted.

Final deliverables included annotated flows, high-fidelity screens, and a prioritized backlog for development. The sprint collapsed risk, shortened timelines, and left iverjohn with actionable design artifacts. Teams set KPIs and ownership for iteration continuity.

Build and Iterate: Engineering, Testing, and Feedback Loops

We translated early ideas into a pragmatic codebase, choosing modular architecture and a strict MVP scope. Engineers at iverjohn adopted continuous integration, feature flags, and automated tests to keep risk low while shipping fast. Telemetry and release notes documented decisions for cross-team learning and regular retrospectives.

Quality assurance mixed automated suites with exploratory sessions and staged rollouts. Usability tests highlighted surprising pain points, and metrics guided triage so fixes were prioritized by impact rather than instinct.
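One common way to combine feature flags with staged rollouts is to hash each user into a stable percentage bucket. The sketch below assumes that approach; the function names, the flag name, and the hashing scheme are illustrative and not taken from the team's codebase.

```python
import hashlib


def rollout_bucket(user_id: str, flag_name: str) -> int:
    """Map a user to a stable bucket in [0, 100) for a given flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(user_id: str, flag_name: str, rollout_percent: int) -> bool:
    """Return True if the user falls inside the current rollout percentage."""
    return rollout_bucket(user_id, flag_name) < rollout_percent


# Hypothetical flag name and user ID, for illustration only.
if is_enabled("user-123", "new-onboarding", rollout_percent=10):
    print("serve the new onboarding flow")
else:
    print("serve the existing flow")
```

Because the bucket depends only on the flag name and user ID, a user who sees the feature at 10% still sees it when the rollout widens to 50%, which keeps staged releases consistent for each person.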

Customer feedback and telemetry closed the loop: weekly demos, rapid A/B experiments, and clear bug triage ensured each sprint delivered measurable improvements. The mindset was ruthless prioritization and empathy for users, which helped the team evolve the product responsively.

Launch Playbook: Marketing, Sales, and Onboarding Mechanics

In the final sprint we turned hypotheses into a tangible go-to-market script, aligning audience segments with launch timing and budget. Iverjohn's team mapped core messages to channels, prioritizing experiments that could scale fast.

The marketing mix blended paid acquisition and content seeding; every channel had KPIs and a rapid A/B cadence.

| Channel | KPI |
|---|---|
| | Activation |

Onboarding was treated as product-led conversion: welcome journeys, contextual tips, and checklists reduced churn and educated users. Product analytics fed back into content updates and support scripts, so each round of learning arrived faster.

Metrics focused on activation, trial-to-paid conversion, and NPS; weekly reviews let teams pivot messaging or pricing. Growth experiments like referral loops and onboarding optimizations framed the next roadmap, balancing revenue with long-term retention.

Impact Metrics: Growth, Retention, and Actionable Learnings

We tracked adoption curves from beta testers to power users, mapping channels that sparked initial spikes and those that sustained momentum. Storytelling metrics turned vague intuition into measurable milestones, giving the team clear north stars.

Retention analysis revealed where value was delivered: onboarding walkthroughs, friction points in the core flow, and feature stickiness. We segmented cohorts to uncover who returned after seven, thirty, and ninety days and why they stuck.
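The seven, thirty, and ninety day checks can be sketched as follows. This is a minimal example under a stated assumption: a user counts as retained at day N if they have any activity N or more days after signup (other definitions, such as activity within a fixed window, are equally valid). All names and dates are invented for illustration.

```python
from datetime import date


def retention_rate(signups, activity, day):
    """Share of users with any activity at least `day` days after signup."""
    if not signups:
        return 0.0
    retained = 0
    for user, signup_date in signups.items():
        if any((d - signup_date).days >= day for d in activity.get(user, [])):
            retained += 1
    return retained / len(signups)


# Hypothetical cohort: signup date per user.
signups = {
    "ana": date(2024, 1, 1),
    "ben": date(2024, 1, 1),
    "cy": date(2024, 1, 2),
    "dee": date(2024, 1, 3),
}

# Hypothetical activity dates per user; "dee" never returned.
activity = {
    "ana": [date(2024, 1, 9), date(2024, 4, 5)],
    "ben": [date(2024, 1, 11)],
    "cy": [date(2024, 2, 5)],
}

for day in (7, 30, 90):
    print(f"day {day}: {retention_rate(signups, activity, day):.0%}")
```

Running the same function per segment (for example, by acquisition channel) is one way to see which cohorts stick, and the interviews then explain why.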

A/B tests and NPS surveys created a feedback loop that informed rapid pivots. Qualitative interviews explained quantitative blips, and occasionally a single insight produced a product change that boosted week-over-week engagement and conversion rates.
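Before acting on an A/B result, it helps to check whether the difference in conversion could plausibly be noise. The sketch below uses a standard two-proportion z-test; the article does not say which statistical method the team used, so treat this as one reasonable option, with hypothetical counts.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: conversions and visitors for variants A and B.
z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the variants really differ, but it says nothing about why; that is where the qualitative interviews come in.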

We turned those learnings into KPIs, tightening experiments and resource allocation. The roadmap was reprioritized around proven levers, reducing churn and accelerating revenue while keeping qualitative context central to future decisions and measuring long-term impact.